*TLA = Three Letter Acronym.

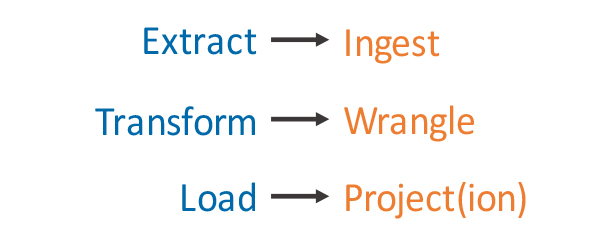

TLAs. The world is full of them. But as processes shift, so too does the language with which we describe them. The process of preparing data for analytical usage is transforming. Gone are the days of writing business requirements, mapping new data feeds from written specifications and handing off to a select bunch of extract, transform, load (ETL) developers.

IT departments are finally cottoning on to the fact that it is far more efficient to place tools in the hands of the subject-matter experts who understand the data domains. The role of IT changes from doer to enabler: making the right tools available in a secure and robust data ecosystem.

This federated approach reinforces the concept of business data ownership. It brings to the fore the role of data stewardship – prioritising work that directly supports new business use cases. It also aligns better with the agile working methods that many companies are now adopting.

This isn’t to say that we encourage data federation or duplication; we still want to integrate once and reuse many times through the use of data layers across an integrated data fabric.

So, why does the ETL TLA need to change? Let's examine the rationale.

From Extract, to Ingest

Data no longer comes from a relatively small number of on-premises product processing systems – where data was typically obtained in batches according to a regular schedule.

From transactions and interactions to IoT sensors, there are now many more sources of data. As we move to service-oriented and event-based architectures, data is much more likely to be continuous and streamed through infrastructure like an enterprise service bus (ESB). We need to be able to tap into these streams and rapidly ingest, standardise and integrate the source data.
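To make the idea concrete, here is a minimal sketch of ingesting a stream and standardising each event as it arrives. It assumes events reach us as Python dictionaries; the field names (`cust`, `amt`, `ts`) and the target schema are hypothetical, and a plain list stands in for a real stream from a service bus.

```python
from datetime import datetime, timezone
from typing import Dict, Iterable, Iterator


def standardise(event: Dict) -> Dict:
    """Map one raw event onto a common (hypothetical) schema."""
    return {
        "source": event["source"].strip().lower(),
        "customer_id": str(event["cust"]),
        "amount": round(float(event["amt"]), 2),
        "event_time": datetime.fromtimestamp(event["ts"], tz=timezone.utc).isoformat(),
    }


def ingest(stream: Iterable[Dict]) -> Iterator[Dict]:
    """Standardise events one at a time, skipping malformed ones."""
    for event in stream:
        try:
            yield standardise(event)
        except (KeyError, TypeError, ValueError):
            # Assumption: a real pipeline would route these to an error queue.
            continue


# A list stands in for a continuous stream in this sketch.
raw_events = [
    {"source": " POS ", "cust": 42, "amt": "19.990", "ts": 0},
    {"source": "web", "cust": 7},  # malformed: amount and timestamp missing
    {"source": "IoT", "cust": "A9", "amt": 3, "ts": 86400},
]
ingested = list(ingest(raw_events))
```

Because `ingest` is a generator, each event is standardised as it is read rather than waiting for a scheduled batch, which is the shift from extract to ingest in miniature.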

From Transform, to Wrangle

The explosion in the use of data across diverse information products means that data is repurposed many times for each downstream consuming application. The adoption of the extract, load, transform (ELT) paradigm reinforces this point: data must be integrated with other data before complex transformations can occur.

Data may need to be derived, aggregated and summarised, to be optimised for consumption by use case or application. Creating complex new transformations requires a greater understanding of the data domain. New data-wrangling tools can also help business users accomplish these types of tasks.
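As an illustration only, the three steps named above (derive, aggregate, summarise) might look like this on a handful of already-integrated records. The sample data, the `margin` calculation and the output shape are all assumptions made for the sketch, not a description of any particular wrangling tool.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical, already-integrated transaction records.
transactions = [
    {"customer_id": "42", "channel": "pos", "amount": 20.0, "cost": 12.0},
    {"customer_id": "42", "channel": "web", "amount": 30.0, "cost": 21.0},
    {"customer_id": "7", "channel": "web", "amount": 10.0, "cost": 4.0},
]

# Derive: add a margin field computed from existing fields.
derived = [{**t, "margin": t["amount"] - t["cost"]} for t in transactions]

# Aggregate: group the derived rows by customer.
by_customer = defaultdict(list)
for row in derived:
    by_customer[row["customer_id"]].append(row)

# Summarise: one row per customer, shaped for a hypothetical reporting use case.
summary = {
    customer_id: {
        "orders": len(rows),
        "total_amount": sum(r["amount"] for r in rows),
        "avg_margin": mean(r["margin"] for r in rows),
    }
    for customer_id, rows in by_customer.items()
}
```

Deciding that margin is the right measure, and that customer is the right grain, is exactly the kind of domain knowledge that sits with the business user rather than with IT.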

From Load, to Project(ion)

We no longer simply load data to tables in a traditional enterprise data warehouse. Most organisations now run an analytical ecosystem in which open source technologies supplement traditional data warehouses. The term ‘data projection’ extends the way data may be consumed – as a logical view spanning multiple platforms in the analytical ecosystem, or delivered via an API to a consuming application. Think of a projection as a set of flexible layers to access the data.
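A rough sketch of the idea, assuming two in-memory collections stand in for two platforms in the ecosystem. The names `warehouse_customers`, `data_lake_clicks`, `customer_activity` and `get_customer_activity` are hypothetical: the first function plays the role of a logical view, the second the role of an API layered on top of it.

```python
from typing import Dict, Iterator, List

# Two hypothetical platforms, each holding part of the picture.
warehouse_customers = {"42": {"name": "Acme Ltd", "segment": "enterprise"}}
data_lake_clicks = [
    {"customer_id": "42", "page": "/pricing"},
    {"customer_id": "42", "page": "/contact"},
    {"customer_id": "7", "page": "/home"},
]


def customer_activity() -> Iterator[Dict]:
    """Logical view: joins both platforms at read time; nothing is copied."""
    for click in data_lake_clicks:
        customer = warehouse_customers.get(click["customer_id"])
        if customer is not None:
            yield {
                "customer_id": click["customer_id"],
                "name": customer["name"],
                "page": click["page"],
            }


def get_customer_activity(customer_id: str) -> List[Dict]:
    """API-style projection: the same view, filtered for one consumer."""
    return [row for row in customer_activity() if row["customer_id"] == customer_id]
```

Neither function loads data into a new table; each is a layer over data that stays where it is, so further projections can be added for other consumers without another integration step.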

Change behaviours, not just vocabulary

The ultimate goal is to accelerate the creation of new information products and improve efficiencies. These new tools are intended to identify and automate commonly used data transformation workflows, based on how business analysts and others typically use the data. When done right, this results in less work on the IT side and more self-service capabilities for business end users.

Historically, an organisation selected a single vendor for data integration tasks. Where software from multiple vendors was necessary, the products often didn't play very well together. Building a data integration workbench, running on open source technologies, allows mix-and-match use of best-of-breed tools for different tasks such as ingestion, wrangling, cleansing or managing the data catalogue.

Democratising the creation of information products can act as a real catalyst to drive innovation and deliver superior business outcomes, faster and at a lower cost than the legacy approach.

At Teradata, we constantly evaluate the latest data integration tools and can help you create the right workbench to transform your data management capabilities. For example, Kylo™ – our open source data pipeline ingest and design tool – won Best Emerging Technology at Computing Magazine UK’s annual Big Data and IoT Excellence Awards in June 2017.